SudoQ - a quantum variant of the popular game

Together with Jordi Pillet, who was doing his master’s internship last year at the LPT, we have a new paper, introducing a quantum version of the Sudoku game.

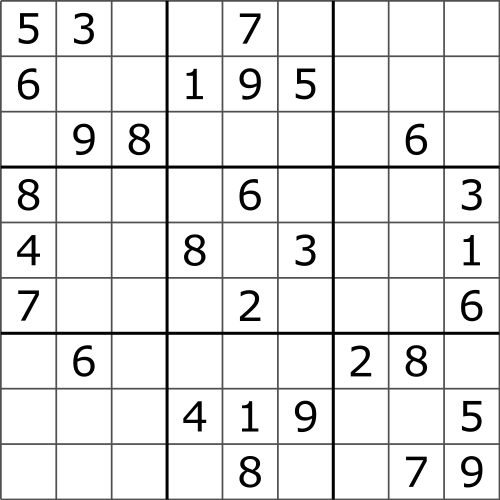

Sudoku puzzles can now be found in many newspapers and have become part of the popular culture. Numerous mathematical results have been proved about Sudoku, most of them dealing with the enumeration of puzzles having different properties. Importantly, it has been shown that the minimum number of clues (or givens, or non empty cells) in any proper Sudoku puzzle is 17.

With SudoQ, we quantize the usual grid and rules. Quantizing the grid means that the cells will hold pure quantum states instead of numbers. Quantizing the rules means that the requirement that rows/columns/sub-squares contain all the numbers exactly once is replaced by the requirement that the corresponding vectors (quantum states) form an orthonormal basis of the Hilbert space. This procedure was used previously for quantizing Latin squares.

We study the SudoQ problem, focusing on the number of classical and quantum solutions, conjecturing that classical grids which are classically unsolvable are also unsolvable as SudoQ grids. We also conjecture that a classical grid which has a unique classical solution (a so-called proper grid) does not have any other quantum solutions. However, we have found that grids having two or more classical solutions can have purely quantum solutions. Also, SudoQ grids can approximate better classically unsolvable puzzles.

A SudoQ puzzle solver, based on Sinkhorn alternating minimization, is described, and a python implementation is provided. The idea of the algorithm is very simple: for a $n^2 \times n^2$ grid, run through the $3n$ constraints (corresponding to row, column, and sub-square normalizations) and apply a Gram-Schmidt procedure to ensure that the corresponding vectors form an orthonormal basis of $\mathbb C^{n^2}$. When doing this, we have to have to make sure to keep the given clues fixed, which amounts to performing the Gram-Schmidt normalization on a subspace. We tested the algorithm on many grids and we have good empirical results, but we lack a proof of convergence in general situations.